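Note: the disk zap command takes the target host and the disk as arguments. If the device previously held data, you may need to run "ceph-volume lvm zap --destroy {DEVICE}" as root on the node in question.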
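The two zap paths described in the note can be sketched as a small dry-run helper. This is only an illustration: the function name `print_zap_cmds` is hypothetical, it assumes the `ceph-deploy disk zap {host} {device}` form used by ceph-deploy 2.x, and it prints the commands for review rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the commands for wiping a disk
# before it is turned into an OSD.
# Usage: print_zap_cmds HOST DEVICE
print_zap_cmds() {
    host=$1
    device=$2
    if [ -z "$host" ] || [ -z "$device" ]; then
        echo "usage: print_zap_cmds HOST DEVICE" >&2
        return 1
    fi
    # Normal path: run from the cluster directory on the admin node.
    printf 'ceph-deploy disk zap %s %s\n' "$host" "$device"
    # Fallback when the device held data before: run as root on the node itself.
    printf '# if that fails, as root on %s: ceph-volume lvm zap --destroy %s\n' "$host" "$device"
}
```

For example, `print_zap_cmds ceph-mon01 /dev/sdb` prints the ceph-deploy command followed by the root fallback as a comment line. Note that `--destroy` wipes the device, so double-check the device path before running either command.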

Adding OSDs

View the ceph-deploy osd help

    [cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd --help
    usage: ceph-deploy osd [-h] {list,create} ...

    Create OSDs from a data disk on a remote host:

        ceph-deploy osd create {node} --data /path/to/device

    For bluestore, optional devices can be used::

        ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device
        ceph-deploy osd create {node} --data /path/to/data --block-wal /path/to/wal-device
        ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device --block-wal /path/to/wal-device

    For filestore, the journal must be specified, as well as the objectstore::

        ceph-deploy osd create {node} --filestore --data /path/to/data --journal /path/to/journal

    For data devices, it can be an existing logical volume in the format of:
    vg/lv, or a device. For other OSD components like wal, db, and journal, it
    can be logical volume (in vg/lv format) or it must be a GPT partition.

    positional arguments:
      {list,create}
        list         List OSD info from remote host(s)
        create       Create new Ceph OSD daemon by preparing and activating a
                     device

    optional arguments:
      -h, --help     show this help message and exit
    [cephadm@ceph-admin ceph-cluster]$

Note: ceph-deploy osd has two subcommands. list shows the OSDs on a remote host; create sets up a new Ceph OSD daemon by preparing and activating a device.

View the ceph-deploy osd create help

    [cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd create --help
    usage: ceph-deploy osd create [-h] [--data DATA] [--journal JOURNAL]
                                  [--zap-disk] [--fs-type FS_TYPE] [--dmcrypt]
                                  [--dmcrypt-key-dir KEYDIR] [--filestore]
                                  [--bluestore] [--block-db BLOCK_DB]
                                  [--block-wal BLOCK_WAL] [--debug]
                                  [HOST]

    positional arguments:
      HOST                  Remote host to connect

    optional arguments:
      -h, --help            show this help message and exit
      --data DATA           The OSD data logical volume (vg/lv) or absolute path
                            to device
      --journal JOURNAL     Logical Volume (vg/lv) or path to GPT partition
      --zap-disk            DEPRECATED - cannot zap when creating an OSD
      --fs-type FS_TYPE     filesystem to use to format DEVICE (xfs, btrfs)
      --dmcrypt             use dm-crypt on DEVICE
      --dmcrypt-key-dir KEYDIR
                            directory where dm-crypt keys are stored
      --filestore           filestore objectstore
      --bluestore           bluestore objectstore
      --block-db BLOCK_DB   bluestore block.db path
      --block-wal BLOCK_WAL
                            bluestore block.wal path
      --debug               Enable debug mode on remote ceph-volume calls
    [cephadm@ceph-admin

Note: create lets you specify the data device, the filestore journal device, and the bluestore block.db and block.wal (write-ahead log) devices, among other options.
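To make the relationship between these options concrete, here is a minimal sketch that assembles a bluestore `ceph-deploy osd create` command line. The function name `build_osd_create` is hypothetical, it uses only the `--data`, `--block-db` and `--block-wal` flags listed in the help output, and it prints the command instead of executing it.

```shell
#!/bin/sh
# Hypothetical helper: assemble a "ceph-deploy osd create" command line for a
# bluestore OSD. It prints the command; it does not execute it.
# Usage: build_osd_create HOST DATA_DEV [DB_DEV] [WAL_DEV]
build_osd_create() {
    host=$1
    data=$2
    db=$3
    wal=$4
    if [ -z "$host" ] || [ -z "$data" ]; then
        echo "usage: build_osd_create HOST DATA_DEV [DB_DEV] [WAL_DEV]" >&2
        return 1
    fi
    cmd="ceph-deploy osd create $host --data $data"
    # block.db and block.wal are optional; add each flag only when a value is given.
    if [ -n "$db" ]; then
        cmd="$cmd --block-db $db"
    fi
    if [ -n "$wal" ]; then
        cmd="$cmd --block-wal $wal"
    fi
    printf '%s\n' "$cmd"
}
```

With only a host and a data device, the result matches the simple form used in this article. Passing an empty string for DB_DEV and a value for WAL_DEV yields a command with `--block-wal` only. Per the help text, the db and wal arguments should be logical volumes in vg/lv format or GPT partitions.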

Add /dev/sdb on ceph-mon01 to the cluster as an OSD

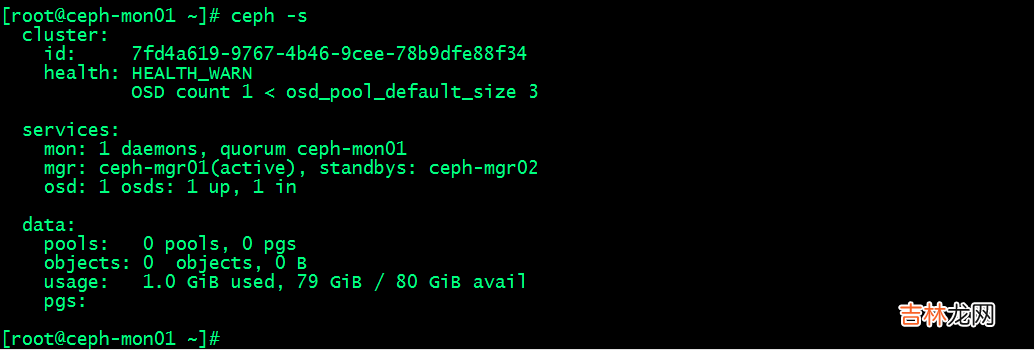

    [cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd create ceph-mon01 --data /dev/sdb

Check the cluster status


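Note: the cluster now reports one healthy OSD with 80G of storage, which confirms that the OSD we just added was created successfully. OSDs on the other hosts are added the same way: zap the disk first, then add it as an OSD.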
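The zap-then-create sequence for the remaining hosts could be scripted roughly as follows. This is a sketch under assumptions: the helper names `run` and `add_osds` are hypothetical, every host is assumed to use the same device path, and `ceph -s` is assumed to be runnable from the admin node. It defaults to a dry run that only prints the commands.

```shell
#!/bin/sh
# Sketch: zap a disk and create an OSD on each host in turn.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: add_osds DEVICE HOST [HOST...]
add_osds() {
    device=$1
    shift
    for host in "$@"; do
        # Stop at the first failure so a bad host does not go unnoticed.
        run ceph-deploy disk zap "$host" "$device" || return 1
        run ceph-deploy osd create "$host" --data "$device" || return 1
    done
    # Finally, show the cluster status so the new OSD count can be checked.
    run ceph -s
}
```

For instance, `add_osds /dev/sdb ceph-mon02 ceph-mon03` (host names here are placeholders, not taken from this cluster) prints two commands per host followed by `ceph -s`. Run it from the ceph-cluster directory on the admin node once the printed commands look right.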

List the OSDs on a host

    [cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd list ceph-mon01
    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf
    [ceph_deploy.cli][INFO  ] Invoked (2.0.1): /bin/ceph-deploy osd list ceph-mon01
    [ceph_deploy.cli][INFO  ] ceph-deploy options:
    [ceph_deploy.cli][INFO  ]  username                      : None
    [ceph_deploy.cli][INFO  ]  verbose                       : False
    [ceph_deploy.cli][INFO  ]  debug                         : False
    [ceph_deploy.cli][INFO  ]  overwrite_conf                : False
    [ceph_deploy.cli][INFO  ]  subcommand                    : list
    [ceph_deploy.cli][INFO  ]  quiet                         : False
    [ceph_deploy.cli][INFO  ]  cd_conf                       : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f01148f9128>
    [ceph_deploy.cli][INFO  ]  cluster                       : ceph
    [ceph_deploy.cli][INFO  ]  host                          : ['ceph-mon01']
    [ceph_deploy.cli][INFO  ]  func                          : <function osd at 0x7f011493d9b0>
    [ceph_deploy.cli][INFO  ]  ceph_conf                     : None
    [ceph_deploy.cli][INFO  ]  default_release               : False
    [ceph-mon01][DEBUG ] connection detected need for sudo
    [ceph-mon01][DEBUG ] connected to host: ceph-mon01
    [ceph-mon01][DEBUG ] detect platform information from remote host
    [ceph-mon01][DEBUG ] detect machine type
    [ceph-mon01][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO  ] Distro info: CentOS Linux 7.9.2009 Core
    [ceph_deploy.osd][DEBUG ] Listing disks on ceph-mon01...
    [ceph-mon01][DEBUG ] find the location of an executable
    [ceph-mon01][INFO  ] Running command: sudo /usr/sbin/ceph-volume lvm list
    [ceph-mon01][DEBUG ]
    [ceph-mon01][DEBUG ]
    [ceph-mon01][DEBUG ] ====== osd.0 =======
    [ceph-mon01][DEBUG ]
    [ceph-mon01][DEBUG ]   [block]       /dev/ceph-56cdba71-749f-4c01-8364-f5bdad0b8f8d/osd-block-538baff0-ed25-4e3f-9ed7-f228a7ca0086
    [ceph-mon01][DEBUG ]
    [ceph-mon01][DEBUG ]       block device              /dev/ceph-56cdba71-749f-4c01-8364-f5bdad0b8f8d/osd-block-538baff0-ed25-4e3f-9ed7-f228a7ca0086
    [ceph-mon01][DEBUG ]       block uuid                40cRBg-53ZO-Dbho-wWo6-gNcJ-ZJJi-eZC6Vt
    [ceph-mon01][DEBUG ]       cephx lockbox secret
    [ceph-mon01][DEBUG ]       cluster fsid              7fd4a619-9767-4b46-9cee-78b9dfe88f34
    [ceph-mon01][DEBUG ]       cluster name              ceph
    [ceph-mon01][DEBUG ]       crush device class        None
    [ceph-mon01][DEBUG ]       encrypted                 0
    [ceph-mon01][DEBUG ]       osd fsid                  538baff0-ed25-4e3f-9ed7-f228a7ca0086
    [ceph-mon01][DEBUG ]       osd id                    0
    [ceph-mon01][DEBUG ]       type                      block
    [ceph-mon01][DEBUG ]       vdo                       0
    [ceph-mon01][DEBUG ]       devices                   /dev/sdb
    [cephadm@ceph-admin ceph-cluster]$
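The listing is verbose when all you want is which device backs which OSD. As a rough sketch, the filter below reduces `ceph-volume lvm list` text output to one "osd.N device" line per OSD. The function name `osd_devices` is hypothetical, and the parsing assumes the text layout shown in this article (an `====== osd.N =======` header followed by a `devices` line). It also strips the `[host][LEVEL]` prefix that ceph-deploy adds, if present.

```shell
#!/bin/sh
# Hypothetical filter: read "ceph-volume lvm list" text output on stdin and
# print "osd.N <devices>" for each OSD found.
osd_devices() {
    # Drop an optional ceph-deploy log prefix such as "[ceph-mon01][DEBUG ] ".
    sed 's/^\[[^]]*\]\[[^]]*\] *//' |
    awk '
        # Header line, e.g. "====== osd.0 =======": remember the OSD name.
        /=+ osd\.[0-9]+ =+/ { osd = $2 }
        # "devices" line: print the remembered OSD with its backing device(s).
        $1 == "devices" { print osd, $2 }
    '
}
```

On the OSD node itself this could be used as `sudo ceph-volume lvm list | osd_devices`; for the output above it would yield `osd.0 /dev/sdb`. If a layout-independent format is needed, `ceph-volume lvm list --format json` may be a more robust starting point.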
