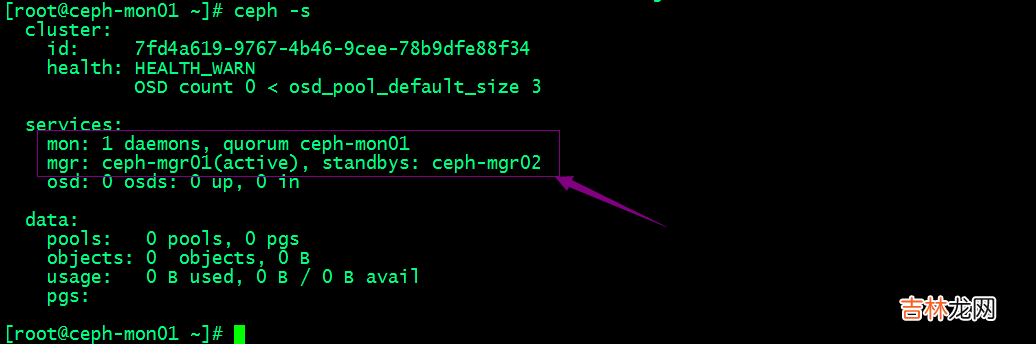

Run `ceph -s` on a cluster node to check the current state of the Ceph cluster.


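Note: the cluster currently has one mon node and two mgr nodes; mgr01 is the active manager and mgr02 is on standby. No OSDs have been added yet, so the cluster status reports a health warning.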
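As a small illustration of reading that status from a script, the sketch below pulls the health value out of `ceph -s` text. `ceph_health` is a hypothetical helper name, not a Ceph command, and it assumes the status output contains a line of the form `health: <VALUE>`, which may differ between Ceph releases.

```shell
# ceph_health: hypothetical helper, not part of Ceph.
# Reads `ceph -s` text on stdin and prints the value that follows "health:".
# Assumption: the status output has a line whose first field is "health:".
ceph_health() {
    awk '$1 == "health:" { print $2; exit }'
}

# Typical use on a cluster node (needs a running cluster, so it is left commented out):
#   ceph -s | ceph_health
```

The function only filters text, so it can be tried against saved status output without touching the cluster.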

Adding OSDs to the RADOS cluster

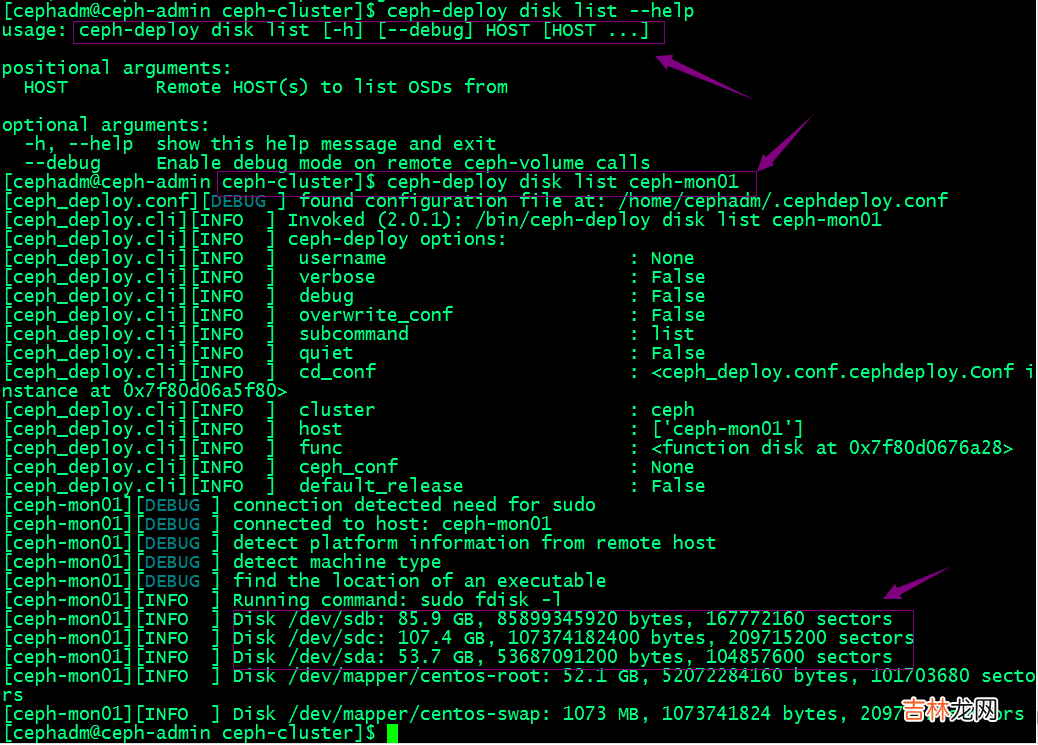

Listing and zapping disks

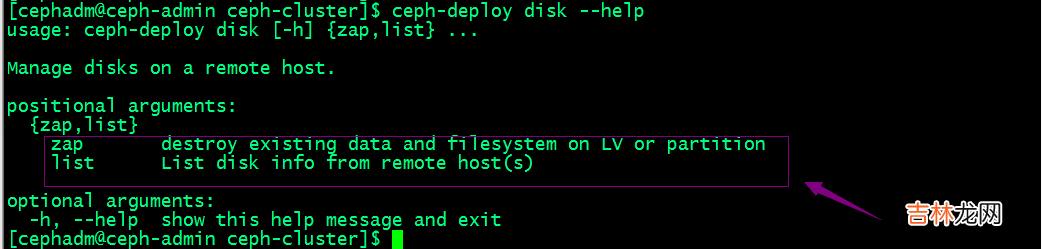

View the help for the `ceph-deploy disk` command.


Note: `ceph-deploy disk` has two subcommands. `list` lists the disks on the given host, and `zap` wipes the given disks on that host.
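Because `zap` is destructive, it can help to print the exact command before running it. The following is a minimal dry-run sketch: `build_disk_cmd` is a hypothetical wrapper name introduced here for illustration, and it only echoes the `ceph-deploy disk` command line rather than executing it.

```shell
# build_disk_cmd: hypothetical dry-run helper, not part of ceph-deploy.
# Usage: build_disk_cmd list HOST
#        build_disk_cmd zap  HOST DISK [DISK ...]
# It prints the command line that would be run and executes nothing.
build_disk_cmd() {
    if [ "$#" -lt 2 ]; then
        echo "usage: build_disk_cmd {list|zap} HOST [DISK ...]" >&2
        return 1
    fi
    sub="$1"
    host="$2"
    shift 2
    case "$sub" in
        list)
            echo "ceph-deploy disk list $host"
            ;;
        zap)
            # zap needs at least one device path after the host
            if [ "$#" -eq 0 ]; then
                echo "zap requires at least one disk" >&2
                return 1
            fi
            echo "ceph-deploy disk zap $host $*"
            ;;
        *)
            echo "unknown subcommand: $sub" >&2
            return 1
            ;;
    esac
}

# Example: show the command for wiping two disks on ceph-mon01.
build_disk_cmd zap ceph-mon01 /dev/sdb /dev/sdc
```

Once the printed line looks right, it can be copied into the admin shell in the cluster working directory.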


Zap sdb and sdc on mon01:

    [cephadm@ceph-admin ceph-cluster]$ ceph-deploy disk zap --help
    usage: ceph-deploy disk zap [-h] [--debug] [HOST] DISK [DISK ...]

    positional arguments:
      HOST        Remote HOST(s) to connect
      DISK        Disk(s) to zap

    optional arguments:
      -h, --help  show this help message and exit
      --debug     Enable debug mode on remote ceph-volume calls
    [cephadm@ceph-admin ceph-cluster]$ ceph-deploy disk zap ceph-mon01 /dev/sdb /dev/sdc
    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf
    [ceph_deploy.cli][INFO] Invoked (2.0.1): /bin/ceph-deploy disk zap ceph-mon01 /dev/sdb /dev/sdc
    [ceph_deploy.cli][INFO] ceph-deploy options:
    [ceph_deploy.cli][INFO] username: None
    [ceph_deploy.cli][INFO] verbose: False
    [ceph_deploy.cli][INFO] debug: False
    [ceph_deploy.cli][INFO] overwrite_conf: False
    [ceph_deploy.cli][INFO] subcommand: zap
    [ceph_deploy.cli][INFO] quiet: False
    [ceph_deploy.cli][INFO] cd_conf: <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f35f8500f80>
    [ceph_deploy.cli][INFO] cluster: ceph
    [ceph_deploy.cli][INFO] host: ceph-mon01
    [ceph_deploy.cli][INFO] func: <function disk at 0x7f35f84d1a28>
    [ceph_deploy.cli][INFO] ceph_conf: None
    [ceph_deploy.cli][INFO] default_release: False
    [ceph_deploy.cli][INFO] disk: ['/dev/sdb', '/dev/sdc']
    [ceph_deploy.osd][DEBUG ] zapping /dev/sdb on ceph-mon01
    [ceph-mon01][DEBUG ] connection detected need for sudo
    [ceph-mon01][DEBUG ] connected to host: ceph-mon01
    [ceph-mon01][DEBUG ] detect platform information from remote host
    [ceph-mon01][DEBUG ] detect machine type
    [ceph-mon01][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO] Distro info: CentOS Linux 7.9.2009 Core
    [ceph-mon01][DEBUG ] zeroing last few blocks of device
    [ceph-mon01][DEBUG ] find the location of an executable
    [ceph-mon01][INFO] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdb
    [ceph-mon01][WARNIN] --> Zapping: /dev/sdb
    [ceph-mon01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
    [ceph-mon01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync
    [ceph-mon01][WARNIN] stderr: 10+0 records in
    [ceph-mon01][WARNIN] 10+0 records out
    [ceph-mon01][WARNIN] stderr: 10485760 bytes (10 MB) copied, 0.0721997 s, 145 MB/s
    [ceph-mon01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>
    [ceph_deploy.osd][DEBUG ] zapping /dev/sdc on ceph-mon01
    [ceph-mon01][DEBUG ] connection detected need for sudo
    [ceph-mon01][DEBUG ] connected to host: ceph-mon01
    [ceph-mon01][DEBUG ] detect platform information from remote host
    [ceph-mon01][DEBUG ] detect machine type
    [ceph-mon01][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO] Distro info: CentOS Linux 7.9.2009 Core
    [ceph-mon01][DEBUG ] zeroing last few blocks of device
    [ceph-mon01][DEBUG ] find the location of an executable
    [ceph-mon01][INFO] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdc
    [ceph-mon01][WARNIN] --> Zapping: /dev/sdc
    [ceph-mon01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
    [ceph-mon01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdc bs=1M count=10 conv=fsync
    [ceph-mon01][WARNIN] stderr: 10+0 records in
    [ceph-mon01][WARNIN] 10+0 records out
    [ceph-mon01][WARNIN] 10485760 bytes (10 MB) copied
    [ceph-mon01][WARNIN] stderr: , 0.0849861 s, 123 MB/s
    [ceph-mon01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdc>
    [cephadm@ceph-admin ceph-cluster]$
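The output above is long, and the lines that matter are the `Zapping successful for: <Raw Device: ...>` messages. As a rough sketch, the filter below extracts the device paths from those messages so a saved log can be checked quickly. `zapped_devices` is a hypothetical name, and the pattern is based only on the message format shown in this log; other ceph-volume versions may word it differently.

```shell
# zapped_devices: hypothetical helper, not part of ceph-deploy or ceph-volume.
# Reads a ceph-deploy log on stdin and prints one device path per
# "Zapping successful for: <Raw Device: ...>" message found.
# Note: grep -o is a common extension (GNU, BSD, BusyBox), not strict POSIX.
zapped_devices() {
    grep -o 'Zapping successful for: <Raw Device: [^>]*>' |
        sed 's/.*<Raw Device: \(.*\)>/\1/'
}

# Typical use (zap.log is an assumed file name holding the captured output):
#   zapped_devices < zap.log
```

Comparing the printed paths with the disks passed to `ceph-deploy disk zap` is a quick way to confirm that every device was wiped.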
