Note: at this point, all the components of our RADOS cluster have been deployed.

Managing OSDs

Viewing OSD information with the ceph command

1. View the OSD status

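    [root@ceph-mon01 ~]# ceph osd stat
    10 osds: 10 up, 10 in; epoch: e56

Note: osds is the total number of OSDs in the cluster; up is the number of OSDs that are active and online; in is the number of OSDs that are in the cluster.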
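The three counters can also be checked from a script. The following is a minimal sketch, assuming the single-line output format shown above (other Ceph releases may print extra detail on this line); `osd_stat_summary` is a hypothetical helper name, not part of Ceph.

```shell
# Sketch: summarize one line of `ceph osd stat` output read from stdin.
# osd_stat_summary is a hypothetical helper, not a Ceph command.
osd_stat_summary() {
    # Extract "total up in" from a line such as
    # "10 osds: 10 up, 10 in; epoch: e56".
    counts=$(sed -n 's/^\([0-9][0-9]*\) osds: \([0-9][0-9]*\) up.*, \([0-9][0-9]*\) in.*/\1 \2 \3/p')
    if [ -z "$counts" ]; then
        echo "unrecognized ceph osd stat output" >&2
        return 2
    fi
    # Split the three numbers into $1 (total), $2 (up) and $3 (in).
    set -- $counts
    echo "total=$1 up=$2 in=$3"
    # Succeed only when every OSD is both up and in.
    [ "$2" -eq "$1" ] && [ "$3" -eq "$1" ]
}
```

On a monitor node it could be used as `ceph osd stat | osd_stat_summary`; the exit status is non-zero when any OSD is down or out, which makes it convenient in a health-check script.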

2. List the OSD IDs

    [root@ceph-mon01 ~]# ceph osd ls
    0
    1
    2
    3
    4
    5
    6
    7
    8
    9
    [root@ceph-mon01 ~]#

3. View the OSD map

    [root@ceph-mon01 ~]# ceph osd dump
    epoch 56
    fsid 7fd4a619-9767-4b46-9cee-78b9dfe88f34
    created 2022-09-24 00:36:13.639715
    modified 2022-09-24 02:29:38.086464
    flags sortbitwise,recovery_deletes,purged_snapdirs
    crush_version 25
    full_ratio 0.95
    backfillfull_ratio 0.9
    nearfull_ratio 0.85
    require_min_compat_client jewel
    min_compat_client jewel
    require_osd_release mimic
    pool 1 'testpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 16 pgp_num 16 last_change 42 flags hashpspool stripe_width 0
    max_osd 10
    osd.0 up   in  weight 1 up_from 55 up_thru 0 down_at 0 last_clean_interval [0,0) 192.168.0.71:6800/52355 172.16.30.71:6800/52355 172.16.30.71:6801/52355 192.168.0.71:6801/52355 exists,up bf3649af-e3f4-41a2-a5ce-8f1a316d344e
    osd.1 up   in  weight 1 up_from 9 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.71:6802/49913 172.16.30.71:6802/49913 172.16.30.71:6803/49913 192.168.0.71:6803/49913 exists,up 7293a12a-7b4e-4c86-82dc-0acc15c3349e
    osd.2 up   in  weight 1 up_from 13 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.72:6800/48196 172.16.30.72:6800/48196 172.16.30.72:6801/48196 192.168.0.72:6801/48196 exists,up 96c437c5-8e82-4486-910f-9e98d195e4f9
    osd.3 up   in  weight 1 up_from 17 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.72:6802/48679 172.16.30.72:6802/48679 172.16.30.72:6803/48679 192.168.0.72:6803/48679 exists,up 4659d2a9-09c7-49d5-bce0-4d2e65f5198c
    osd.4 up   in  weight 1 up_from 21 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.73:6800/48122 172.16.30.73:6800/48122 172.16.30.73:6801/48122 192.168.0.73:6801/48122 exists,up de019aa8-3d2a-4079-a99e-ec2da2d4edb9
    osd.5 up   in  weight 1 up_from 25 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.73:6802/48601 172.16.30.73:6802/48601 172.16.30.73:6803/48601 192.168.0.73:6803/48601 exists,up 119c8748-af3b-4ac4-ac74-6171c90c82cc
    osd.6 up   in  weight 1 up_from 29 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.74:6801/58248 172.16.30.74:6800/58248 172.16.30.74:6801/58248 192.168.0.74:6802/58248 exists,up 08d8dd8b-cdfe-4338-83c0-b1e2b5c2a799
    osd.7 up   in  weight 1 up_from 33 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.74:6803/58727 172.16.30.74:6802/58727 172.16.30.74:6803/58727 192.168.0.74:6804/58727 exists,up 9de6cbd0-bb1b-49e9-835c-3e714a867393
    osd.8 up   in  weight 1 up_from 37 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.75:6800/48268 172.16.30.75:6800/48268 172.16.30.75:6801/48268 192.168.0.75:6801/48268 exists,up 63aaa0b8-4e52-4d74-82a8-fbbe7b48c837
    osd.9 up   in  weight 1 up_from 41 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.75:6802/48751 172.16.30.75:6802/48751 172.16.30.75:6803/48751 192.168.0.75:6803/48751 exists,up 6bf3204a-b64c-4808-a782-434a93ac578c
    [root@ceph-mon01 ~]#

Removing an OSD

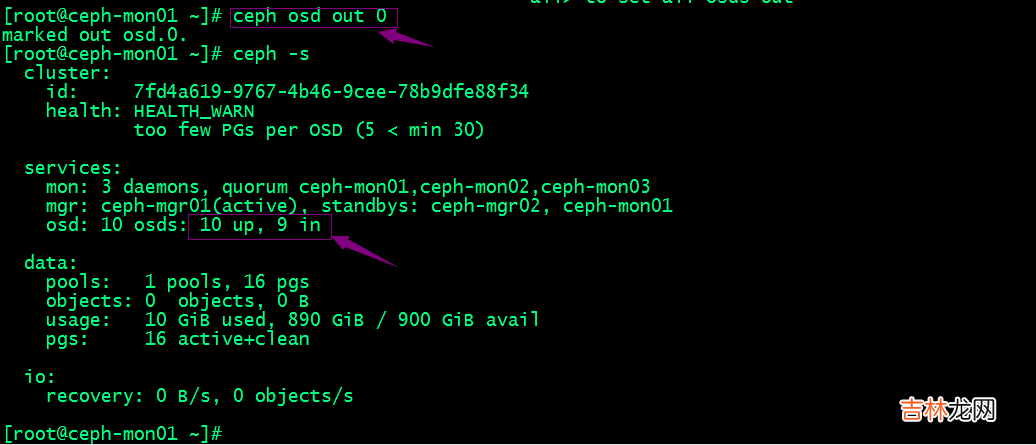

1. Take the device out of the cluster


Note: after OSD 0 is marked out, the cluster status shows only 9 OSDs in the cluster.
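The command itself is only visible in the screenshot, so here is a hedged sketch of this step. It assumes the standard `ceph osd out {osd-num}` command; `mark_osd_out` is a hypothetical wrapper name that simply validates the ID before calling it.

```shell
# Sketch: mark an OSD "out" of the cluster.
# mark_osd_out is a hypothetical helper; `ceph osd out` is the Ceph command.
mark_osd_out() {
    osd_id=$1
    # Refuse anything that is not a plain non-negative integer.
    case $osd_id in
        ''|*[!0-9]*)
            echo "usage: mark_osd_out <osd-num>" >&2
            return 2
            ;;
    esac
    ceph osd out "$osd_id"
}
```

For example, `mark_osd_out 0` followed by `ceph osd stat` should report one fewer OSD in the "in" count. The step can be reverted with `ceph osd in {osd-num}`.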

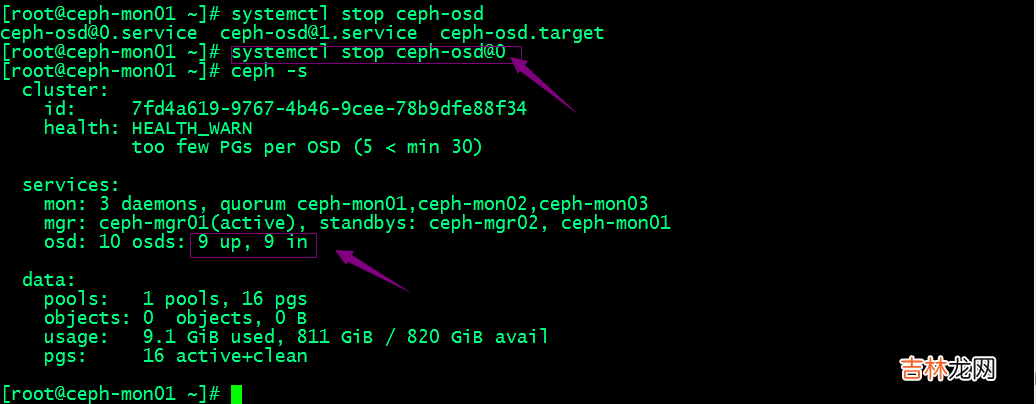

2. Stop the daemon


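Note: the daemon must be stopped on the host where the OSD runs, by stopping ceph-osd@{osd-num}. Once it is stopped, the cluster status shows only 9 OSD daemons in the up state.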
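As a minimal sketch of this step, assuming the OSD daemons are managed by systemd (as the unit name ceph-osd@{osd-num} suggests); `stop_osd_daemon` is a hypothetical wrapper name.

```shell
# Sketch: stop the daemon of one OSD. Run this on the host that owns the OSD.
# stop_osd_daemon is a hypothetical helper; the unit name comes from the text.
stop_osd_daemon() {
    osd_id=$1
    # Refuse anything that is not a plain non-negative integer.
    case $osd_id in
        ''|*[!0-9]*)
            echo "usage: stop_osd_daemon <osd-num>" >&2
            return 2
            ;;
    esac
    # ceph-osd@<osd-num> is the systemd unit instance for that OSD.
    systemctl stop "ceph-osd@${osd_id}"
}
```

For example, `stop_osd_daemon 0` on the host of OSD 0, followed by `ceph osd stat` on a monitor node, should report one fewer OSD in the "up" count.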

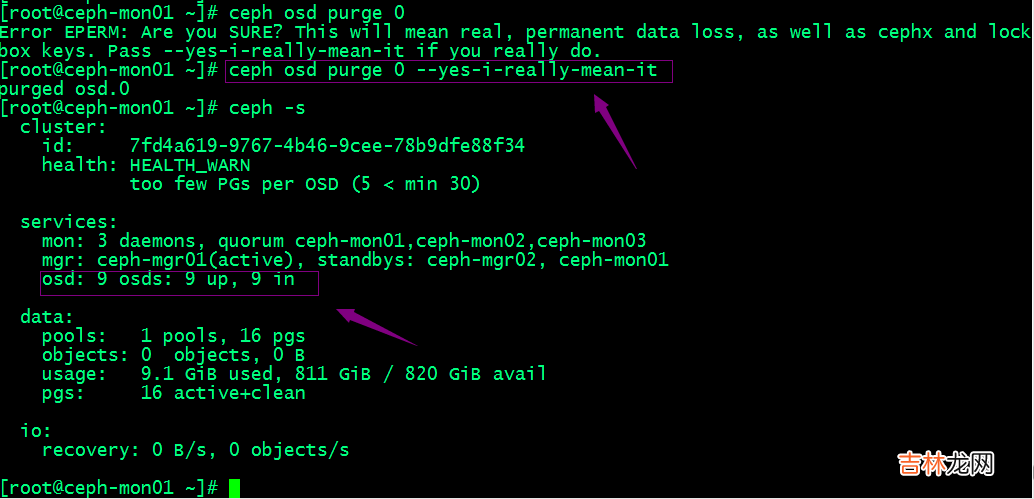

3. Remove the device


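Note: after the OSD is removed, the cluster status shows only 9 OSDs in total. If an OSD configuration entry like the following exists in the ceph.conf configuration file, the administrator should delete it manually after removing the OSD.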

    [osd.1]
        host = {hostname}
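The removal command is only visible in the screenshot, so which variant the author ran is not known. The sketch below shows two common ways to do it, under the assumption that the OSD has already been marked out and its daemon stopped: `ceph osd purge` (available since Luminous, so it applies to the Mimic cluster shown above) and the older three-command sequence. The function names `remove_osd` and `remove_osd_legacy` are hypothetical.

```shell
# Sketch: remove an OSD that is already out and stopped.
# remove_osd and remove_osd_legacy are hypothetical helper names.

# Check that the argument is a plain non-negative integer.
valid_osd_id() {
    case $1 in
        ''|*[!0-9]*) return 1 ;;
    esac
    return 0
}

# Luminous and later: purge removes the OSD from the CRUSH map,
# deletes its auth key, and removes it from the OSD map in one step.
remove_osd() {
    valid_osd_id "$1" || { echo "usage: remove_osd <osd-num>" >&2; return 2; }
    ceph osd purge "$1" --yes-i-really-mean-it
}

# Older releases: the same three actions as separate commands.
remove_osd_legacy() {
    valid_osd_id "$1" || { echo "usage: remove_osd_legacy <osd-num>" >&2; return 2; }
    ceph osd crush remove "osd.$1" &&
        ceph auth del "osd.$1" &&
        ceph osd rm "$1"
}
```

For example, `remove_osd 0` followed by `ceph osd stat` should report 9 OSDs in total. Neither variant edits ceph.conf, so a leftover section for the removed OSD still has to be deleted by hand.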
