PLAN Rumor Detection: "Interpretable Rumor Detection in Microblogs by Attending to User Interactions" (Part 2)

Recap:

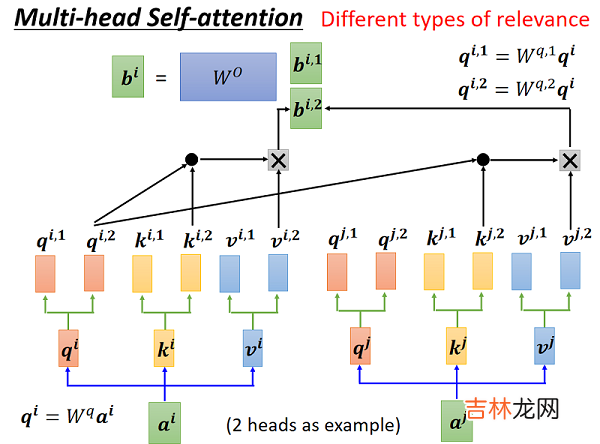


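2.4 Structure Aware Post-Level Attention Network (StA-PLAN)

The problem with the model above: when tweets are organized as a linear sequence, the structural information of the conversation is easily lost.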

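To combine the advantages of an explicit tree structure with the self-attention mechanism, the paper extends the PLAN model to include structural information.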

$\begin{array}{l}\alpha_{i j}=\operatorname{softmax}\left(\frac{q_{i} k_{j}^{T}+a_{i j}^{K}}{\sqrt{d_{k}}}\right)\\z_{i}=\sum\limits _{j=1}^{n} \alpha_{i j}\left(v_{j}+a_{i j}^{V}\right)\end{array}$

Here, $a_{ij}^{V}$ and $a_{ij}^{K}$ are vectors that represent which of the five structural relationships (i.e., parent, child, before, after, and self) holds between a pair of tweets.
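The two formulas can be made concrete with a minimal single-head sketch in plain Python. Everything named here (`structure_aware_attention`, the relation ids, the toy vectors) is hypothetical and chosen for illustration. One assumption deserves emphasis: since $a_{ij}^{K}$ is described as a vector while the score is a scalar, the sketch lets it enter the score through a dot product with $q_i$, as in relative position representations; the paper's actual implementation may differ.

```python
import math

# Hypothetical integer ids for the five structural relationships.
PARENT, CHILD, BEFORE, AFTER, SELF = range(5)

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def structure_aware_attention(q, k, v, rel, a_k, a_v):
    """Single-head attention with structural terms.

    q, k, v: lists of n vectors (one per tweet), each of length d_k.
    rel[i][j]: relation id of tweet j with respect to tweet i.
    a_k[r], a_v[r]: key-side and value-side vectors for relation r.
    Returns (z, alpha).
    """
    n, d_k = len(q), len(q[0])
    z, alpha = [], []
    for i in range(n):
        # Assumption: a_ij^K contributes to the score via a dot product with q_i.
        scores = [
            (dot(q[i], k[j]) + dot(q[i], a_k[rel[i][j]])) / math.sqrt(d_k)
            for j in range(n)
        ]
        weights = softmax(scores)
        # z_i = sum_j alpha_ij * (v_j + a_ij^V)
        z_i = [
            sum(weights[j] * (v[j][d] + a_v[rel[i][j]][d]) for j in range(n))
            for d in range(d_k)
        ]
        alpha.append(weights)
        z.append(z_i)
    return z, alpha

# Toy thread: tweet 0 is the source, tweets 1 and 2 both reply to it.
rel = [
    [SELF, CHILD, CHILD],
    [PARENT, SELF, AFTER],
    [PARENT, BEFORE, SELF],
]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy tweet vectors used as q, k and v
a_k = [[0.1 * r, 0.0] for r in range(5)]  # arbitrary illustrative values
a_v = [[0.0, 0.1 * r] for r in range(5)]
z, alpha = structure_aware_attention(x, x, x, rel, a_k, a_v)
```

Setting every entry of `a_k` and `a_v` to zero reduces the function to ordinary scaled dot-product attention, which is a quick way to see that the structural vectors are the only addition.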

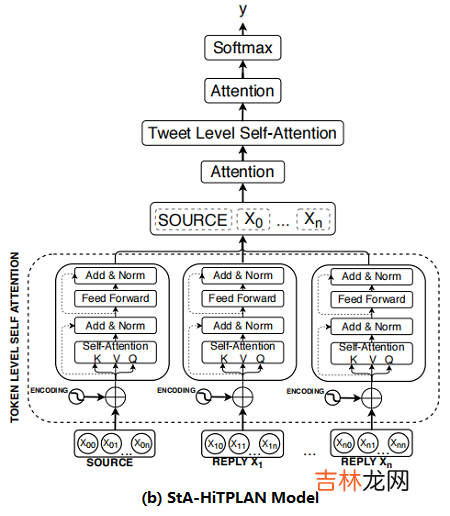

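2.5 Structure Aware Hierarchical Token and Post-Level Attention Network (StA-HiTPLAN)

The PLAN model uses max-pooling to obtain the sentence representation of each tweet. Ideally, however, the model should be allowed to learn how important each word vector is. The paper therefore proposes a hierarchical attention model: attention at the token level, then at the post level. An overview of the hierarchical model is shown in Figure 2b.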
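To illustrate the difference between the two ways of building a sentence representation, here is a small sketch that contrasts max-pooling with a learned weighted sum over token vectors. The scoring vector `w` and the function names are hypothetical, and the weighted sum is only a generic stand-in for "learning the importance of word vectors"; it is not claimed to be the paper's exact token-level mechanism.

```python
import math

def max_pool(tokens):
    """Element-wise max over token vectors, as in the PLAN sentence representation."""
    return [max(col) for col in zip(*tokens)]

def attention_pool(tokens, w):
    """Weighted sum of token vectors; weights are a softmax over the scores w . token."""
    scores = [sum(wi * ti for wi, ti in zip(w, tok)) for tok in tokens]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(tokens[0])
    pooled = [sum(wt * tok[d] for wt, tok in zip(weights, tokens)) for d in range(dim)]
    return pooled, weights

tokens = [[0.2, 0.9], [0.8, 0.1], [0.5, 0.5]]  # three toy token vectors
w = [1.0, 0.0]                                  # hypothetical learned scoring vector
sentence_max = max_pool(tokens)
sentence_att, token_weights = attention_pool(tokens, w)
```

Unlike max-pooling, the weighted sum exposes one weight per token, which is what later makes token-level explanations possible.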


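2.6 Time Delay Embedding

When a source post has just been created, replies are generally skeptical. Once the source post has been out for some time, replies show a stronger tendency to indicate that the post is false. The paper therefore studies the effect of time delay information on the three models above.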

To include time delay information for each tweet, we bin the tweets based on their latency from the time the source tweet was created. We set the total number of time bins to be 100 and each bin represents a 10-minute interval. Tweets with latency of more than 1,000 minutes would fall into the last time bin. We used the positional encoding formula introduced in the transformer network to encode each time bin. The time delay embedding would be added to the sentence embedding of the tweet. The time delay embedding, TDE, for each tweet is:

$\begin{array}{rcl}\mathrm{TDE}_{pos, 2i} &=& \sin \dfrac{pos}{10000^{2i / d_{\text{model}}}} \\ \mathrm{TDE}_{pos, 2i+1} &=& \cos \dfrac{pos}{10000^{2i / d_{\text{model}}}}\end{array}$

where $pos$ represents the time bin each tweet falls into, with $pos \in [0, 100)$, $i$ refers to the dimension, and $d_{\text{model}}$ refers to the total number of dimensions of the model.
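The binning and encoding described above can be sketched as follows. The function names are hypothetical, and the sketch assumes latencies are given in non-negative minutes and that $d_{\text{model}}$ is even.

```python
import math

NUM_BINS = 100    # total number of time bins
BIN_MINUTES = 10  # each bin covers a 10-minute interval

def time_bin(latency_minutes):
    """Map a tweet's latency (minutes since the source tweet) to a bin in [0, 100)."""
    return min(int(latency_minutes // BIN_MINUTES), NUM_BINS - 1)

def time_delay_embedding(pos, d_model):
    """Sinusoidal encoding of time bin `pos`; assumes d_model is even."""
    tde = [0.0] * d_model
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        tde[2 * i] = math.sin(angle)      # even dimensions use sine
        tde[2 * i + 1] = math.cos(angle)  # odd dimensions use cosine
    return tde

def add_time_delay(sentence_embedding, latency_minutes):
    """Add the time delay embedding to a tweet's sentence embedding."""
    pos = time_bin(latency_minutes)
    tde = time_delay_embedding(pos, len(sentence_embedding))
    return [s + t for s, t in zip(sentence_embedding, tde)]

# A reply posted 25 minutes after the source tweet falls into bin 2.
embedded = add_time_delay([0.0, 0.0, 0.0, 0.0], 25)
```

Because the embedding is added rather than concatenated, the sentence embedding keeps its dimensionality and the rest of the model needs no changes.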

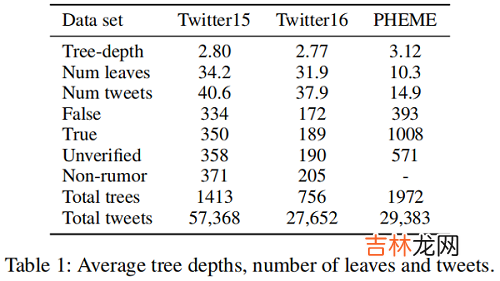

3 Experiments and Results

Dataset


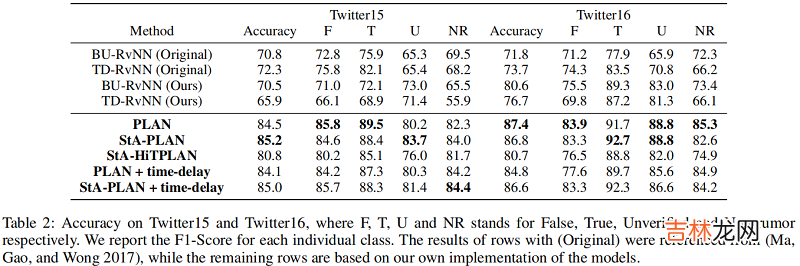

Result


Explaining the predictions

Post-Level Explanations

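First, the final attention layer is used to find the most important tweet, $tweet_{impt}$. Then, from the $i$-th MHA layer, the tweet in that layer that is most relevant to $tweet_{impt}$, $tweet_{rel,i}$, is retrieved. A tweet may be identified as the most relevant tweet more than once. Finally, the tweets are ranked by the number of times they were identified, and the top three are taken as the explanation for the source tweet. For example: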

(Figure: example of a post-level explanation.)
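The ranking procedure for post-level explanations can be sketched in a few lines. The function name and the toy attention values are hypothetical, and the sketch assumes each MHA layer (or each head, if passed separately) is available as an n x n matrix whose row $i$ holds the attention that tweet $i$ pays to every tweet.

```python
from collections import Counter

def post_level_explanation(final_attention, layer_attentions, top_k=3):
    """Rank tweets by how often they are the most relevant to the most important tweet.

    final_attention: list of n weights from the final attention layer.
    layer_attentions: list of n x n attention matrices, one per MHA layer
        (passing one matrix per head is an assumption of this sketch).
    Returns (index of tweet_impt, list of up to top_k tweet indices).
    """
    n = len(final_attention)
    impt = max(range(n), key=lambda j: final_attention[j])
    counts = Counter()
    for attn in layer_attentions:
        row = attn[impt]                           # attention paid by tweet_impt
        rel = max(range(n), key=lambda j: row[j])  # tweet_rel for this layer
        counts[rel] += 1
    return impt, [idx for idx, _ in counts.most_common(top_k)]

# Toy example with three tweets and four attention matrices.
final_attention = [0.1, 0.6, 0.3]
layer_attentions = [
    [[0.3, 0.3, 0.4], [0.2, 0.1, 0.7], [0.3, 0.3, 0.4]],
    [[0.3, 0.3, 0.4], [0.5, 0.3, 0.2], [0.3, 0.3, 0.4]],
    [[0.3, 0.3, 0.4], [0.1, 0.2, 0.7], [0.3, 0.3, 0.4]],
    [[0.3, 0.3, 0.4], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]],
]
impt, explanation = post_level_explanation(final_attention, layer_attentions)
```

In this toy input, tweet 1 is the most important tweet and tweet 2 is picked as most relevant twice, so it ranks first in the explanation.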

Token-Level Explanation

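Token-level explanations can be derived from the weights of the token-level self-attention. For example, in the reply “@inky mark @CP24 as part of a co-op criminal investigation one would URL doesn’t need facts to write stories it appears.”, the phrase “facts to write stories it appears” expresses doubt about the source tweet. The self-attention weight map shows that a large share of the weight concentrates on this part, which suggests that the phrase itself can serve as an explanation.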
