Paper Information

Title: Rumor Detection with Self-supervised Learning on Texts and Social Graph
Authors: Yuan Gao, Xiang Wang, Xiangnan He, Huamin Feng, Yongdong Zhang
Source: 2202, arXiv
Paper: download
Code: download

1 Introduction

Motivation: exploit heterogeneous information (post texts and the social propagation graph).

The paper's contributions are summarized below; a quick look is enough.

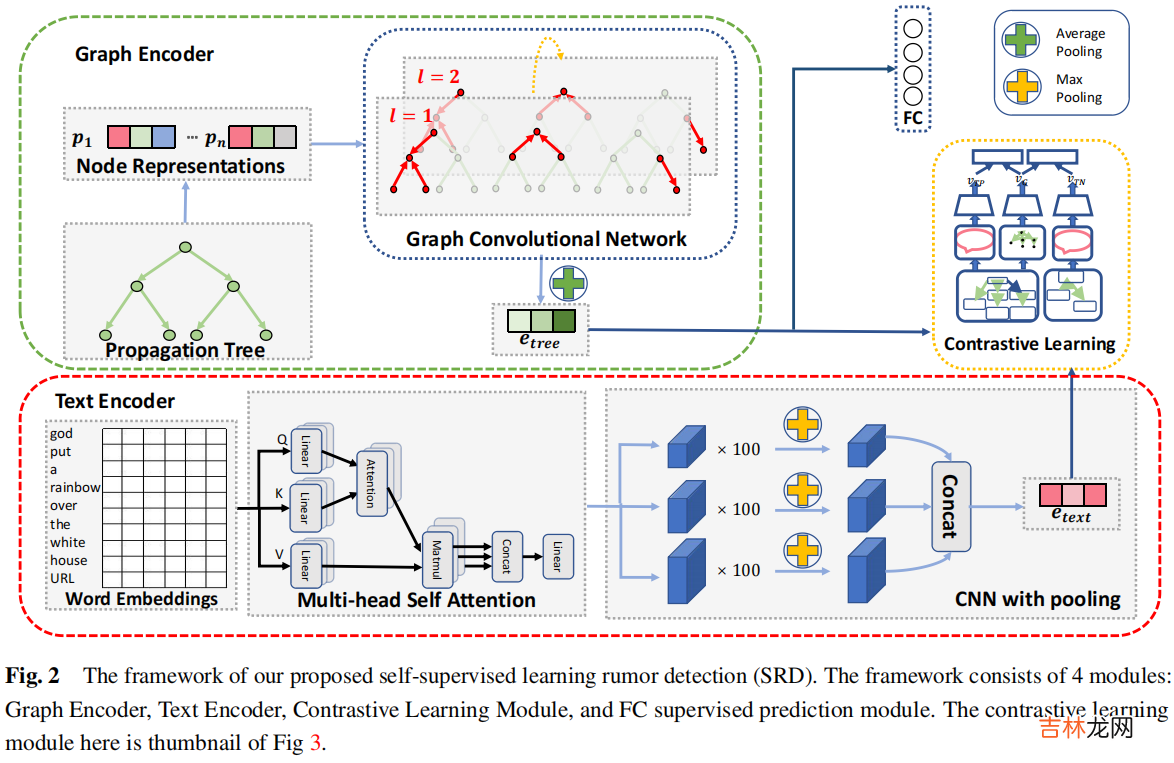

2 Methodology

Overall framework:

[Figure: overall framework]

The framework consists of four modules:

(1) propagation representation learning, which applies a GNN model on the propagation tree;

(2) semantic representation learning, which employs a text CNN model on the post contents;

(3) contrastive learning, which models the co-occurring relations among propagation and semantic representations;

(4) rumor prediction, which builds a predictor model upon the event representations.

2.1 Propagation Representation Learning

This module captures the structural features of the propagation tree.

Post features are encoded with graph convolution (GCN) layers:

$\mathbf{H}^{(l)}=\sigma\left(\mathbf{D}^{-\frac{1}{2}} \hat{\mathbf{A}} \mathbf{D}^{-\frac{1}{2}} \mathbf{H}^{(l-1)} \mathbf{W}^{(l)}\right)\quad\quad\quad(2)$

The graph-level representation is obtained by mean pooling over the final-layer post representations:

$\mathbf{g}=f_{\text {mean-pooling }}\left(\mathbf{H}^{(L)}\right)\quad\quad\quad(3)$
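A minimal NumPy sketch of Eqs. (2)-(3), assuming the standard GCN convention that $\hat{\mathbf{A}}$ is the adjacency matrix with self-loops and $\mathbf{D}$ is its degree matrix, that $\sigma$ is ReLU, and that the propagation tree is treated as an undirected graph. These are assumptions, and the function names are hypothetical rather than taken from the paper's code.

```python
import numpy as np


def normalized_adjacency(A):
    """Return D^{-1/2} (A + I) D^{-1/2}, with D the degree matrix of A + I."""
    A_hat = A + np.eye(A.shape[0])          # add self-loops (assumed convention)
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]


def gcn_encode(A, X, weights):
    """GCN layers (Eq. 2) followed by mean pooling over posts (Eq. 3).

    A: (N, N) symmetric adjacency of the propagation tree
    X: (N, F) initial post features H^(0)
    weights: list of L weight matrices W^(l)
    """
    A_norm = normalized_adjacency(A)
    H = X
    for W in weights:
        H = np.maximum(A_norm @ H @ W, 0.0)  # sigma = ReLU (assumption)
    return H.mean(axis=0)                     # graph-level representation g


# Toy tree: post 0 is the source, posts 1 and 2 reply to it, post 3 replies to 1.
edges = [(0, 1), (0, 2), (1, 3)]
A = np.zeros((4, 4))
for u, v in edges:
    A[u, v] = A[v, u] = 1.0                   # undirected (assumption)

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))
weights = [rng.normal(size=(8, 16)), rng.normal(size=(16, 16))]
g = gcn_encode(A, X, weights)
print(g.shape)  # (16,)
```

Mean pooling makes $\mathbf{g}$ independent of the number of posts in the tree, so events with trees of different sizes map to vectors of the same length.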

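2.2 Semantic Representation Learning

This module captures semantic features. First, multi-head attention is applied to the post features to obtain the initial word embeddings $\mathbf{Z} \in \mathbb{R}^{l \times d_{\text {model }}}$, where $l$ is the number of posts and $d_{\text {model }}$ is the embedding dimension of each post: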

$\boldsymbol{Z}_{i}=f_{\text {attention }}\left(\boldsymbol{Q}_{i}, \boldsymbol{K}_{i}, \boldsymbol{V}_{i}\right)=f_{\text {softmax }}\left(\frac{\boldsymbol{Q}_{i} \boldsymbol{K}_{i}^{T}}{\sqrt{d_{k}}}\right) \boldsymbol{V}_{i}\quad\quad\quad(4)$

$\boldsymbol{Z}=f_{\text {multi-head }}(\boldsymbol{Q}, \boldsymbol{K}, \boldsymbol{V})=f_{\text {concatenate }}\left(\boldsymbol{Z}_{1}, \ldots, \boldsymbol{Z}_{h}\right) \boldsymbol{W}^{O}\quad\quad\quad(5)$
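Eqs. (4)-(5) in NumPy, as a minimal sketch. It assumes self-attention in the standard Transformer sense: $\boldsymbol{Q}_i$, $\boldsymbol{K}_i$, $\boldsymbol{V}_i$ are per-head linear projections of the same post feature matrix. The projection matrices and sizes below are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(Q, K, V):
    """Scaled dot-product attention, Eq. (4)."""
    d_k = K.shape[-1]
    return softmax(Q @ K.T / np.sqrt(d_k)) @ V


def multi_head(X, W_q, W_k, W_v, W_o):
    """Multi-head attention, Eq. (5).

    X: (l, d_model) input features; W_q, W_k, W_v: lists of h matrices of shape
    (d_model, d_k); W_o: (h * d_k, d_model) output projection W^O.
    """
    heads = [attention(X @ wq, X @ wk, X @ wv) for wq, wk, wv in zip(W_q, W_k, W_v)]
    return np.concatenate(heads, axis=-1) @ W_o


rng = np.random.default_rng(0)
l, d_model, h = 5, 16, 4
d_k = d_model // h
X = rng.normal(size=(l, d_model))
W_q = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
W_k = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
W_v = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
W_o = rng.normal(size=(h * d_k, d_model))
Z = multi_head(X, W_q, W_k, W_v, W_o)
print(Z.shape)  # (5, 16)
```

Choosing $d_k = d_{\text{model}} / h$ keeps the concatenated heads at width $d_{\text{model}}$, so $\boldsymbol{W}^{O}$ is square.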

Next, a CNN is applied to further extract textual information.

For a filter whose receptive field (window) size is $h$, the feature $\boldsymbol{v}_{i}$ at position $i$ is:

$\boldsymbol{v}_{i}=\sigma\left(\boldsymbol{w} \cdot \boldsymbol{z}_{i: i+h-1}+\boldsymbol{b}\right)\quad\quad\quad(6)$
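Eq. (6) evaluated at every window position of a sentence, as a minimal sketch. It assumes $\boldsymbol{z}_{i:i+h-1}$ is the concatenation of $h$ consecutive rows of $\mathbf{Z}$ (so $\boldsymbol{w}$ has length $h \cdot d$), that $\sigma$ is ReLU, and uses 0-based indices; `conv_feature_map` is a hypothetical name.

```python
import numpy as np


def conv_feature_map(Z, w, b, h):
    """Apply one filter of width h over Z (n, d); returns n - h + 1 features."""
    n = Z.shape[0]
    feats = []
    for i in range(n - h + 1):
        window = Z[i:i + h].reshape(-1)                 # z_{i:i+h-1}, flattened to length h*d
        feats.append(max(float(w @ window + b), 0.0))   # sigma = ReLU (assumption)
    return np.array(feats)


Z = np.arange(8.0).reshape(4, 2)   # n = 4 tokens, d = 2
v = conv_feature_map(Z, w=np.ones(4), b=0.0, h=2)
print(v.tolist())  # [6.0, 14.0, 22.0]
```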

Sliding the filter across a sentence of $n$ words gives the feature map:

$\boldsymbol{v}=\left[\boldsymbol{v}_{1}, \boldsymbol{v}_{2}, \ldots, \boldsymbol{v}_{n-h+1}\right]\quad\quad\quad(7)$

Max-pooling over $\boldsymbol{v}$ gives the global representation $\hat{\boldsymbol{v}}$:

$\hat{\boldsymbol{v}}=f_{\text {max-pooling }}(\boldsymbol{v})\quad\quad\quad(8)$

With $n$ feature maps (one per filter), the pooled outputs are concatenated into the text representation $\mathbf{t}$:

$\mathbf{t}=f_{\text {concatenate }}\left(\hat{\boldsymbol{v}}_{1}, \hat{\boldsymbol{v}}_{2}, \ldots, \hat{\boldsymbol{v}}_{n}\right)\quad\quad\quad(9)$
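The max-pooling and concatenation steps, Eqs. (8)-(9), as a minimal sketch: each of the $n$ feature maps is reduced to its maximum and the results are stacked into $\mathbf{t}$. The feature maps are hypothetical toy values; in practice each would come from one convolution filter, and filters of different widths yield maps of different lengths, which max-pooling absorbs.

```python
import numpy as np


def text_representation(feature_maps):
    """feature_maps: list of n 1-D arrays, one per filter (lengths may differ)."""
    pooled = [np.max(v) for v in feature_maps]   # Eq. (8): v_hat = max-pooling(v)
    return np.array(pooled)                      # Eq. (9): t = [v_hat_1, ..., v_hat_n]


maps = [np.array([1.0, 5.0, 2.0]), np.array([0.0, -1.0]), np.array([3.0])]
t = text_representation(maps)
print(t.tolist())  # [5.0, 0.0, 3.0]
```

The length of $\mathbf{t}$ equals the number of filters $n$, not the sentence length.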

2.3 Contrastive Learning

The paper treats the propagation-based representation $\boldsymbol{g}_{i}$ and the semantic representation $\boldsymbol{t}_{i}$ of the same post as a positive pair.

2.3.1 Propagation-Semantic Instance Discrimination (PSID)

The instance-level contrastive loss is:

$\mathcal{L}_{\mathrm{ssl}}=\sum\limits_{i \in C}-\log \left[\frac{\exp \left(s\left(\boldsymbol{g}_{i}, \boldsymbol{t}_{i}\right) / \tau\right)}{\sum\limits _{j \in C} \exp \left(s\left(\boldsymbol{g}_{i}, \boldsymbol{t}_{j}\right) / \tau\right)}\right] \quad\quad\quad(10)$

where $s(\cdot,\cdot)$ is a similarity function and $\tau$ is a temperature hyperparameter.
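Eq. (10) in NumPy, as a minimal sketch. It assumes $s(\cdot,\cdot)$ is cosine similarity and that row $i$ of `G` and `T` holds $\boldsymbol{g}_i$ and $\boldsymbol{t}_i$ for the $i$-th element of $C$; the default temperature is hypothetical. Each row is a softmax classification in which $\boldsymbol{t}_i$ is the positive for $\boldsymbol{g}_i$ and every other $\boldsymbol{t}_j$ is a negative.

```python
import numpy as np


def cosine_sim_matrix(G, T):
    """Entry (i, j) is the cosine similarity s(g_i, t_j)."""
    Gn = G / np.linalg.norm(G, axis=1, keepdims=True)
    Tn = T / np.linalg.norm(T, axis=1, keepdims=True)
    return Gn @ Tn.T


def psid_loss(G, T, tau=0.5):
    """Sum over i of -log softmax_j(s(g_i, t_j) / tau), evaluated at j = i."""
    logits = cosine_sim_matrix(G, T) / tau
    m = logits.max(axis=1, keepdims=True)                     # stabilize log-sum-exp
    log_denom = m[:, 0] + np.log(np.exp(logits - m).sum(axis=1))
    return float(np.sum(log_denom - np.diag(logits)))


I = np.eye(3)
aligned = psid_loss(I, I, tau=1.0)               # each g_i matches its own t_i
shuffled = psid_loss(I, I[[1, 2, 0]], tau=1.0)   # positives are mismatched
print(aligned < shuffled)  # True
```

Subtracting the row maximum before exponentiating leaves the loss unchanged but avoids overflow when $\tau$ is small.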

2.3.2 Propagation-Semantic Cluster Discrimination (PSCD)

Cluster-level contrastive learning:

$\begin{array}{l}\underset{\mathbf{S}_{G}}{\text{min}}\quad \sum\limits _{c \in C} \underset{\mathbf{a}_{1}}{\text{min}} \left\|E_{1}(\mathbf{g})-\mathbf{S}_{G} \mathbf{a}_{1}\right\|_{2}^{2}+\underset{\mathbf{S}_{T}}{\text{min}} \sum\limits _{c \in C} \underset{\mathbf{a}_{2}}{\text{min}} \left\|E_{2}(\mathbf{t})-\mathbf{S}_{T} \mathbf{a}_{2}\right\|_{2}^{2}\\\text { s.t. } \quad \mathbf{a}_{1}^{\top} \mathbf{1}=1, \quad \mathbf{a}_{2}^{\top} \mathbf{1}=1\end{array}\quad\quad\quad(11)$
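A minimal sketch of Eq. (11) under a k-means-style reading, which rests on two assumptions not stated above: the assignment $\mathbf{a}$ is restricted to one-hot vectors (these satisfy $\mathbf{a}^{\top}\mathbf{1}=1$), so $\mathbf{S}\mathbf{a}$ picks one column (cluster center) of $\mathbf{S}$; and the encoder outputs $E_1(\mathbf{g})$, $E_2(\mathbf{t})$ are given as matrices with one row per element of $C$. Under these assumptions the inner minimization is a nearest-center assignment, and the outer minimization over $\mathbf{S}$ can be approached by alternating updates as in Lloyd's algorithm. All function names are hypothetical.

```python
import numpy as np


def cluster_term(E, S):
    """sum_c min_a ||E[c] - S a||^2 with one-hot a (assumption).

    E: (num_samples, d) encoded representations; S: (d, K) matrix of cluster centers.
    Returns the objective value and the chosen cluster index per sample.
    """
    sq_dist = ((E[:, :, None] - S[None, :, :]) ** 2).sum(axis=1)  # (num_samples, K)
    assign = sq_dist.argmin(axis=1)
    return float(sq_dist.min(axis=1).sum()), assign


def update_centers(E, S, assign):
    """Minimize over S for fixed assignments: each center becomes its cluster mean."""
    S_new = S.copy()
    for k in range(S.shape[1]):
        members = E[assign == k]
        if len(members) > 0:              # keep empty clusters unchanged
            S_new[:, k] = members.mean(axis=0)
    return S_new


def pscd_objective(E_g, E_t, S_G, S_T):
    """Eq. (11): propagation-view term plus semantic-view term."""
    return cluster_term(E_g, S_G)[0] + cluster_term(E_t, S_T)[0]


E_g = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
S_G = np.array([[0.0, 10.0], [0.0, 10.0]])    # columns are the two centers
before, assign = cluster_term(E_g, S_G)
after, _ = cluster_term(E_g, update_centers(E_g, S_G, assign))
print(before, after)  # 2.0 1.0
```

The two views are clustered independently here, exactly as the two separate sums in Eq. (11) suggest; any coupling between the propagation and semantic clusterings is not covered by this sketch.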
