
Finally, here is a simple aggregation example: for each node, we compute the sum of the values of its neighboring nodes:

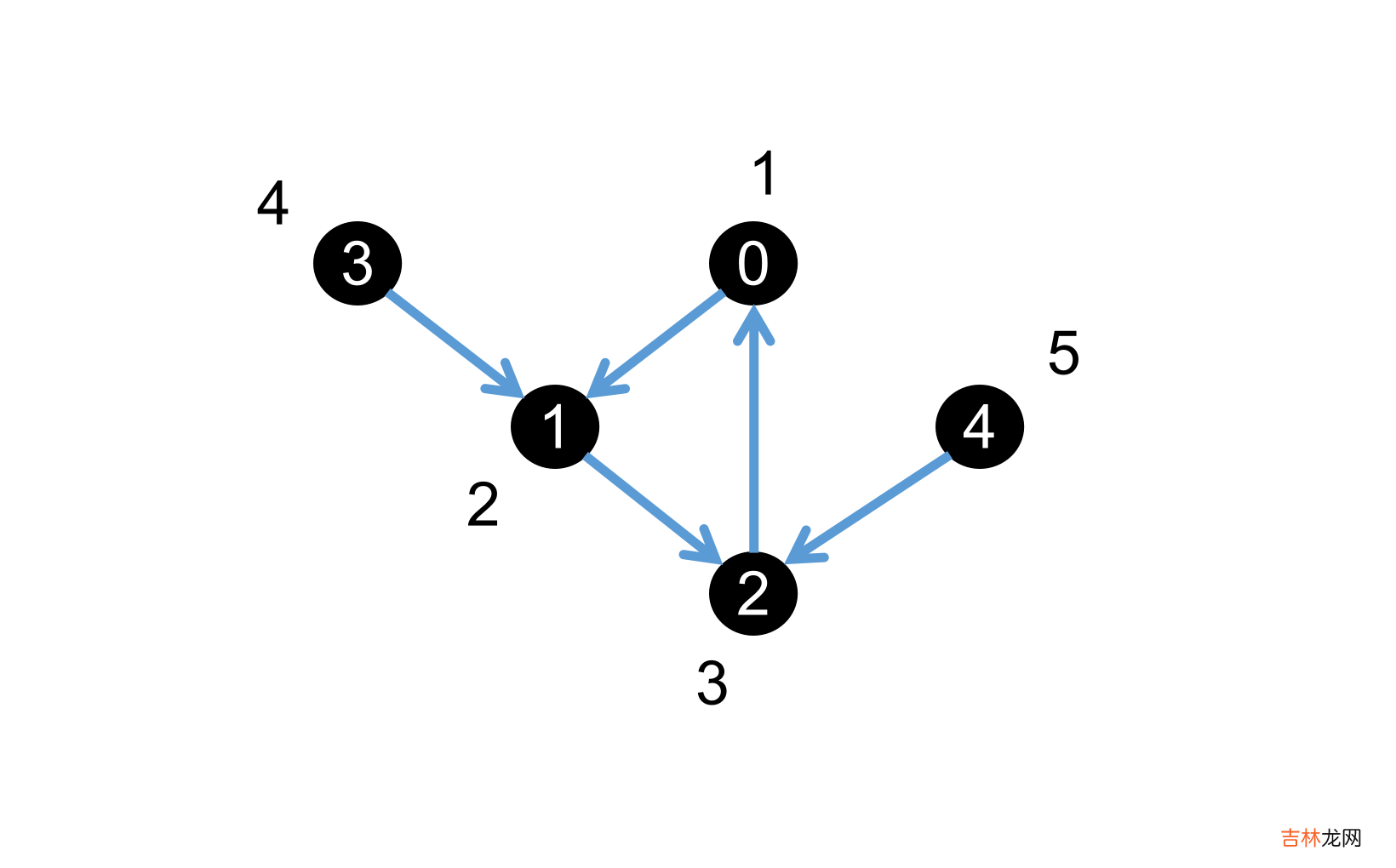

```python
import mindspore as ms
from mindspore import ops
from mindspore_gl import Graph, GraphField
from mindspore_gl.nn import GNNCell

# Values kept exactly as in the original post. Note that src_idx lists five
# edges with source ids 0..4; in the translated code printed below, n_nodes
# only sets the number of output rows and n_edges is not used.
n_nodes = 3
n_edges = 3
src_idx = ms.Tensor([0, 1, 2, 3, 4], ms.int32)
dst_idx = ms.Tensor([1, 2, 0, 1, 2], ms.int32)
graph_field = GraphField(src_idx, dst_idx, n_nodes, n_edges)
node_feat = ms.Tensor([[1], [2], [3], [4], [5]], ms.float32)


class GraphConvCell(GNNCell):
    def construct(self, x, y, g: Graph):
        # Attach x as the source-side attribute "hs" and y as the
        # destination-side attribute "hd".
        g.set_src_attr({"hs": x})
        g.set_dst_attr({"hd": y})
        # For every destination vertex v, sum "hs" over its in-neighbors.
        return [g.sum([u.hs for u in v.innbs]) for v in g.dst_vertex]


ret = GraphConvCell()(
    node_feat[src_idx], node_feat[dst_idx], *graph_field.get_graph()
).asnumpy().tolist()
print(ret)
```

A single call to an interface like `g.sum` is all it takes here, which makes the code easy to write and very readable. Running the script prints the vertex-centric source on the left, the code it is translated into on the right, and finally the result:

```
$ python3 test_msgl_01.py
--------------------------------------------------------------------------------------------------------------------------------------------
| def construct(self, x, y, g: Graph):                              1 ||  1  def construct(
|                                                                     ||         self,
|                                                                     ||         x,
|                                                                     ||         y,
|                                                                     ||         src_idx,
|                                                                     ||         dst_idx,
|                                                                     ||         n_nodes,
|                                                                     ||         n_edges,
|                                                                     ||         UNUSED_0=None,
|                                                                     ||         UNUSED_1=None,
|                                                                     ||         UNUSED_2=None
|                                                                     ||     ):
|                                                                     ||  2      SCATTER_ADD = ms.ops.TensorScatterAdd()
|                                                                     ||  3      SCATTER_MAX = ms.ops.TensorScatterMax()
|                                                                     ||  4      SCATTER_MIN = ms.ops.TensorScatterMin()
|                                                                     ||  5      GATHER = ms.ops.Gather()
|                                                                     ||  6      ZEROS = ms.ops.Zeros()
|                                                                     ||  7      FILL = ms.ops.Fill()
|                                                                     ||  8      MASKED_FILL = ms.ops.MaskedFill()
|                                                                     ||  9      IS_INF = ms.ops.IsInf()
|                                                                     || 10      SHAPE = ms.ops.Shape()
|                                                                     || 11      RESHAPE = ms.ops.Reshape()
|                                                                     || 12      scatter_src_idx = RESHAPE(src_idx, (SHAPE(src_idx)[0], 1))
|                                                                     || 13      scatter_dst_idx = RESHAPE(dst_idx, (SHAPE(dst_idx)[0], 1))
|     g.set_src_attr({'hs': x})                                     2 || 14      hs, = [x]
|     g.set_dst_attr({'hd': y})                                     3 || 15      hd, = [y]
|     return [g.sum([u.hs for u in v.innbs]) for v in g.dst_vertex] 4 || 16      SCATTER_INPUT_SNAPSHOT1 = GATHER(hs, src_idx, 0)
|                                                                     || 17      return SCATTER_ADD(
|                                                                     ||             ZEROS(
|                                                                     ||                 (n_nodes,) + SHAPE(SCATTER_INPUT_SNAPSHOT1)[1:],
|                                                                     ||                 SCATTER_INPUT_SNAPSHOT1.dtype
|                                                                     ||             ),
|                                                                     ||             scatter_dst_idx,
|                                                                     ||             SCATTER_INPUT_SNAPSHOT1
|                                                                     ||         )
--------------------------------------------------------------------------------------------------------------------------------------------
[[3.0], [5.0], [7.0]]
```

The figure below shows the graph topology corresponding to this example:

[Figure: topology graph of the example above]
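To make the translated code above more concrete, here is a minimal NumPy sketch of the same gather-then-scatter-add pattern. It is not part of mindspore-gl: the function name `sum_in_neighbors` and the parameter `n_dst` are hypothetical, and `np.add.at` is used here as a stand-in for `TensorScatterAdd`.

```python
import numpy as np


def sum_in_neighbors(src_feat, src_idx, dst_idx, n_dst):
    """Sum, for every destination node, the features of its in-neighbors.

    Hypothetical helper for illustration only (not a mindspore-gl API).
    """
    # Gather one feature row per edge, taken from the edge's source node.
    per_edge = src_feat[src_idx]
    # Start from zeros: one row per destination node.
    out = np.zeros((n_dst,) + per_edge.shape[1:], dtype=per_edge.dtype)
    # Unbuffered scatter-add: repeated destination indices accumulate.
    np.add.at(out, dst_idx, per_edge)
    return out


src_idx = np.array([0, 1, 2, 3, 4])
dst_idx = np.array([1, 2, 0, 1, 2])
node_feat = np.array([[1], [2], [3], [4], [5]], dtype=np.float32)

result = sum_in_neighbors(node_feat, src_idx, dst_idx, n_dst=3)
print(result.tolist())  # [[3.0], [5.0], [7.0]]
```

Destination 0 receives the value from source 2, destination 1 receives the values from sources 0 and 3, and destination 2 receives the values from sources 1 and 4, which gives the same result as the mindspore-gl run.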

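Summary

Computation has moved from element-wise operations to matrix operations, then to tensor operations, and finally to the abstraction of graph operations. Each stage in this evolution of computing paradigms needs matching tools to support it: numpy in the matrix era, mindspore in the tensor era, and mindspore-gl in the graph era. We would not claim that any one paradigm is necessarily more advanced than the others, but for coders "formula as code" is a perennial topic, and mindspore-gl does this job well. Graph-mode programming is not only more readable, it is also substantially optimized for performance on GPUs.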
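As a rough illustration of that progression, the sketch below computes the neighbor sum from the example in this article twice: once element by element, and once as an adjacency-matrix product. The variable names are made up for illustration, and the graph-style counterpart is the `g.sum([u.hs for u in v.innbs])` expression from the example.

```python
import numpy as np

src_idx = [0, 1, 2, 3, 4]
dst_idx = [1, 2, 0, 1, 2]
values = [1.0, 2.0, 3.0, 4.0, 5.0]
n_dst = 3

# Element-wise style: loop over edges and accumulate one scalar at a time.
loop_sum = [0.0] * n_dst
for s, d in zip(src_idx, dst_idx):
    loop_sum[d] += values[s]

# Matrix style: adjacency[d, s] = 1 for an edge s -> d, so the
# aggregation becomes a single matrix-vector product.
adjacency = np.zeros((n_dst, len(values)))
adjacency[dst_idx, src_idx] = 1.0
matrix_sum = adjacency @ np.array(values)

print(loop_sum)             # [3.0, 5.0, 7.0]
print(matrix_sum.tolist())  # [3.0, 5.0, 7.0]
```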

Copyright notice

This article was first published at: https://www.cnblogs.com/dechinphy/p/mindspore_gl.html

Author ID: DechinPhy

More original articles: https://www.cnblogs.com/dechinphy/

Tip link: https://www.cnblogs.com/dechinphy/gallery/image/379634.html

Tencent Cloud column mirror: https://cloud.tencent.com/developer/column/91958

CSDN mirror: https://blog.csdn.net/baidu_37157624?spm=1008.2028.3001.5343

51CTO mirror: https://blog.51cto.com/u_15561675

References

- https://gitee.com/mindspore/graphlearning

- https://www.bilibili.com/video/BV14a411976w/

- Seastar: Vertex-Centric Programming for Graph Neural Networks. Yidi Wu and other co-authors.
